Introduction

“Everything invented in the past 150 years will be reinvented using AI within the next 15 years.” Unlike with any other technology, the ethics and governance of AI have become a key concern because of its decision-making ability. Social diversity, equity and inclusion are considered key success factors for mitigating AI risks while driving social justice. Sustainability has become a broad and complex topic for AI. Many organizations (governments, not-for-profits, charities and NGOs) have diverse strategies that drive AI for both business optimization and social justice. Partnerships and collaborations have become more important than ever, because diversified and distributed data is the source of AI while bias is its key risk. Given the scope, diversity and complexity of AI applications, there is a clear need for an abstraction framework that simplifies and generalizes AI governance.

Complexity in AI Governance

The AI spectrum is quite broad. From IoT sensor management to smart city development, different stakeholders need to consider different perspectives, such as social justice, strategy, technology, sustainability, ethics, policies, regulations and compliance. Things get even more complex when these perspectives are entangled. For example:

- Environmental and Social: AI has been identified as a key enabler for 79% (134 targets) of the United Nations (UN) Sustainable Development Goals (SDGs). However, 35% (59 targets) may experience a negative impact from AI. While the environment stands to gain the most, society bears the most negative impact from AI, which creates social concerns,

- Environmental and Technology: Cloud computing is promising because of the availability and scalability of resources in data centres. With emerging telecommunication technologies (e.g., 5G), the energy consumed in transferring data from IoT/edge devices to the cloud has become a concern for carbon footprint and sustainability. This energy concern is one factor shifting the technology landscape from cloud computing to fog computing,

- Economic and Sustainability: Businesses are driving AI in the hope that it can contribute about US$15.7 trillion to the world economy by 2030. The UN SDGs, which cover areas critically important for humanity and the planet, are also due to be achieved by 2030. Synergy between the economic and sustainability strategies for AI will be essential,

- Economic and Social: Businesses are driving AI in the hope that it can contribute about US$15.7 trillion to the world economy by 2030. However, research predicts that through 2022, 85% of AI projects will fail due to bias in data, algorithms, or the teams responsible for managing them. AI ethics and governance, as the basis for sustainable AI, have therefore become a key success factor for the economic goals of AI,

- Economic and Ethical: No government has yet been able to pass AI law; only ethical frameworks and regulatory guidelines exist. As a result, on our way to economic prosperity, humanity faces many emerging AI risks, such as autonomous weapons, automation-spurred job loss, socioeconomic inequality, bias caused by data and algorithms, privacy violations and deepfakes.

On the other hand, complex differences between AI applications do not necessarily rule out similarities in other perspectives, such as cultural values, community or strategy. For example, similar organizations may work on different sustainability goals for social justice. Such differences in AI strategy should not obstruct partnership and collaboration opportunities between them.

KITE Abstraction Framework

The Australian Red Cross is driving AI for sustainability by mobilizing the power of humanity. Our AI strategy is to enhance social justice by mitigating AI risks and driving AI benefits for sustainability. We recently developed a productive AI ethics and governance framework to support sustainable AI initiatives in the industry, such as IFRC volunteering and partnerships on emerging technologies, the 17 UN SDGs, The Red Cross Strategy 2030, and social DEI (Diversity, Equity and Inclusion) in AI.

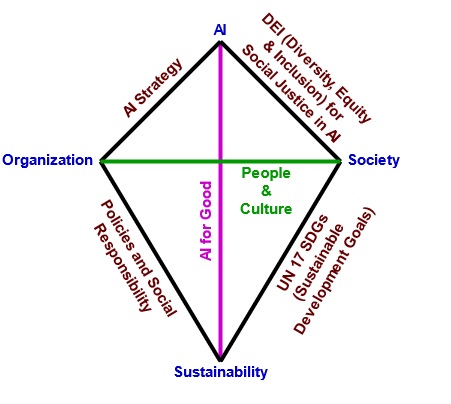

Based on our experience in AI for sustainability, we are presenting this novel abstraction framework aka KITE for reducing the aforesaid complexity in AI governance. The overview of the framework is illustrated by the following diagram.

Irrespective of the complexity of the AI application, this framework focuses on four dimensions:

- AI,

- Organization,

- Society,

- Sustainability,

to understand the stakeholders, strategy, social justice and sustainable impact. As shown in the diagram, the framework analyses the synergy and social impact of AI from organizational, social and sustainability perspectives. These interdependent perspectives make it possible to evaluate the motivations for AI ethics and good governance, AI for good, AI for sustainability, and social diversity and inclusion in AI strategies and initiatives. In our experience, the framework enables organizations to engage systematically with the community, volunteers and partners, and to collaborate towards ethical and sustainable AI for social justice. It hides the application-specific complexities of AI and generalizes the key success factors (KSFs) of AI initiatives, so that stakeholders can easily understand their responsibilities for sustainability and social justice. These key success factors include, but are not limited to, social DEI, the SDGs, strategy, ethics and governance in AI. The framework also supports mitigating AI risks related to bias in data, in algorithms, and among the people involved in AI.