National Quality Assurance Framework

In accordance with a request of the Statistical Commission at its fortieth session (E/2009/24), the Secretary-General has the honour to transmit the report of Statistics Canada, containing a programme review on quality assurance frameworks. Based on a global consultation process, it presents a review of current quality concepts, frameworks and tools. It further proposes to develop a generic National Quality Assurance Framework (NQAF), describes its basic elements and suggests a process to formulate such a generic NQAF for the Commission to adopt at its 2011 session. The Commission is invited to express its views on the substance of the report and the recommendations for future work in this area.

Table of contents

I. Introduction

A. Background and Motivation

B. Terminology

II. Current Quality Concepts, Frameworks and Tools

A. Quality Concepts

B. Quality Policies and Strategies

C. Generic Quality Tools

D. Quality Assurance Frameworks for International Organisations

E. NSO Quality Tools

III. Benefits, Content and Structure of a Generic NQAF

A. Benefits of a NQAF

B. Scope of a National Quality Assurance Framework

C. Development of a Generic NQAF

IV. Points for discussion

Annex 1 Quality Assurance Framework at Statistics Canada

References

I. Introduction

A. Background and Motivation

1. Quality is a central concern for the production of official statistics. A number of countries and international organizations have developed detailed concepts and procedures of quality control. Whilst there is considerable overlap in these various quality frameworks, there exists as yet no generic, internationally agreed national quality assurance framework (NQAF) which could be used by a country to systematically introduce new quality assurance procedures or to systematize its existing procedures. Such a generic NQAF would have to be based on a broad consensus regarding the notion of quality and build on the experiences developed so far.

2. The present programme review is a first step in this direction. The international landscape, describing quality concepts, frameworks and tools that have been developed to date, is presented in Section II of this paper. The third section outlines the benefits of a NQAF, the case for a generic version, and the steps required to make it an internationally agreed framework. Some specific experiences of Canada are presented in Annex 1.

Motivation

3. To fulfill its mandate, a National Statistical Office (NSO) must deliver three things to its citizenry in an exemplary manner: a) information that responds to the country’s evolving, highest priority needs of the future (program relevance); b) information that is representative of the world it seeks to describe (product quality); and c) information that is produced at the lowest possible cost (efficiency).

4. Relevance and quality are intrinsically linked. In the absence of a strong pro-active effort, both decrease over time. The economies and societies we are attempting to measure are changing at an unprecedented pace. We need to close the “relevance gap” between the information we produce and the priority needs of our users.

5. Similarly, a “quality gap” has opened. Household and business respondents are less and less willing to participate in surveys, while their lifestyles and technology make it increasingly difficult to contact them. The critical tools and computer systems needed to produce information deteriorate unless they are maintained. Unprecedented demographic changes within the workforce represent an additional pressure for many NSOs. In some cases, these pressures have manifested themselves in the form of errors in some critical data releases.

6. In a context of limited budgets, quality has to be managed well and trade-offs have to be considered. To do so, quality indicators have to be developed to describe the various aspects of quality and to facilitate an overall assessment of quality at the aggregate level. In particular, there is a need to develop objective composite quality indicators that aggregate the various elements of quality. The purpose would be to achieve the twin objectives of having an overall understanding of quality and having the information needed to establish a balance in meeting the relevance and quality needs in a constrained budget environment. Since such measures may not always be feasible, a substitute could be subjective expert assessments of aggregate quality.
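One simple way to picture a composite quality indicator is as a weighted average of scores for individual quality dimensions. The sketch below is illustrative only: the function name, the 0 to 1 scoring scale, the dimension names used in the example and the weights are all assumptions made for this illustration, not a method prescribed in this paper.

```python
from typing import Mapping


def composite_quality(scores: Mapping[str, float],
                      weights: Mapping[str, float]) -> float:
    """Weighted mean of per-dimension scores (each assumed to lie in [0, 1])."""
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"no score for: {sorted(missing)}")
    total_weight = sum(weights.values())
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    for name in weights:
        if not 0.0 <= scores[name] <= 1.0:
            raise ValueError(f"score for {name!r} is outside [0, 1]")
    return sum(weights[d] * scores[d] for d in weights) / total_weight


# Hypothetical scores and weights for a single survey.
scores = {"relevance": 0.8, "accuracy": 0.6, "timeliness": 0.9}
weights = {"relevance": 2.0, "accuracy": 2.0, "timeliness": 1.0}
index = composite_quality(scores, weights)
```

The weights make the trade-off explicit: raising the weight on timeliness relative to accuracy changes the composite value even when the underlying scores do not change, which is why the choice of weights would itself need to be agreed and documented.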

7. Some aspects of quality can be characterized as “static” in the sense that they are of constant concern and tend to evolve relatively slowly. Other aspects are “dynamic” in the sense that the attention they require may rapidly increase or decrease with changes in the NSO’s environment. Because many aspects of quality are dynamic and deteriorate without pro-active effort, there is a continuing need to invest in quality simply in order to maintain the status quo. The situation is evolving and needs are changing more than ever before. Thus, the role of quality within the overall management of an NSO must be examined continuously.

B. Terminology

8. A NSO is involved in a range of statistical processes, including sample surveys, censuses, administrative data collections, production of price and other economic indices, and statistical compilations like the national accounts and balance of payments. In this paper, for brevity, and in keeping with a convention adopted by several countries, these processes are all referred to generically as surveys. This is an extension of the more usual meaning of survey. The term survey program is used to mean a group of surveys within a domain, and the term statistical program is reserved for the complete suite of surveys within a NSO as distinct from a survey program.

9. The term quality is interpreted in a broad sense, encompassing all aspects of how well statistical processes and statistical output fulfil user and stakeholder expectations. Good quality means not just meeting users’ needs but also addressing respondent concerns regarding reporting burden and confidentiality, and ensuring that the institutional environment is impartial and objective and relies on sound methodology and cost-effective procedures.

10. A national quality assurance framework (NQAF) is assumed to be targeted at roughly the same organisational level as the quality management system described in the well-known ISO 9000 series of quality standards, but tailored to the specific context of a NSO. It focuses on management of core statistical functions rather than on human resources and communications. It encompasses quality guidelines, referring not only to good practices but also to the mechanisms by which they can be implemented.

II. Current Quality Concepts, Frameworks and Tools

A. Quality Concepts

11. Creation and use of a NQAF takes place within the more general context of quality management. This subsection outlines the development of international quality concepts. The following subsections summarise existing quality tools.

12. The most widely used quality standard in the world is the ISO 9000 Quality Management System series. ISO 9000 expresses total quality management (TQM) principles as follows:

- Customer focus: an organisation depends upon its customers and thus must understand and strive to meet their needs; customers are central in determining what constitutes good quality; quality is what is perceived by customers rather than by the organisation.

- Leadership and constancy of purpose: leaders establish unity of purpose and direction of an organisation; they must create and maintain an internal environment that enables staff to be fully involved in achieving the organisation’s objectives; quality improvements require leadership and sustained direction.

- Involvement of people: people at all levels are the essence of an organisation; their full involvement enables their abilities to be fully used.

- Process approach: managing activities and resources as a process is efficient; any process can be broken down into a chain of sub-processes, in which the output of one sub-process is the input to the next.

- Systems approach to management: identifying, understanding and managing processes as a system contributes to efficiency and effectiveness.

- Continual improvement: continual improvement should be a permanent objective of an organisation.

- Factual approach to decision making: effective decisions are based on the analysis of information and data.

- Mutually beneficial supplier relationships: an organisation and its suppliers are interdependent and a mutually beneficial relationship enhances both.

13. However, as stated in the introduction of ISO 9001, the design and implementation of an organisation’s quality management system (QMS) is influenced by varying needs, particular objectives, the products provided, the processes employed and the size and structure of the organisation. It is not the intent of the standard to imply uniformity in the structure of QMSs or uniformity of documentation. In other words, optimum use of a standard for a particular organisation or group of similar organisations implies interpreting the standard as required to deal with the specific context.

14. For NSOs the context may be characterized as follows.

- NSOs are government bodies, not private enterprises. They are not profit based. They supply data to non-paying users rather than to paying customers. For the most part, the users cannot influence quality through purchase decisions.

- Some of the users are actually internal users; for example, the national accounts are both a user of data from numerous surveys and a producer.

- The primary inputs are typically data from individual enterprises, households and persons, whether collected directly or through administrative processes.

- The core production processes are transformations of these individual data into aggregate data and their assembly into statistical products.

- The primary products (typically called outputs) are statistics and accompanying services.

15. ISO 20252:2006, Market, Opinion and Social Research – Vocabulary and Service Requirements, published in 2006, goes part way to providing a quality standard better suited to NSOs than the ISO 9000 series. ISO 20252 places considerable emphasis on the need for a quality management system. However, the standard is not entirely appropriate, being aimed at commercial rather than government organisations. Furthermore, it has only recently become available. Thus, since the mid-1990s, the statistical community – NSOs and statistical divisions/directorates in international organisations compiling statistics – has been developing quality management tools ranging from quality concepts, policies and models to detailed sets of quality procedures and indicators, as summarised in the following subsections.

B. Quality Policies and Strategies

16. In 1994, the UN Statistical Commission (UNSC) provided guidance on the sort of environment within which quality management can flourish by promulgating the UN Fundamental Principles of Official Statistics for national statistical systems. Whilst none of the ten principles refers to quality explicitly, they are all basic to a quality management system. Subsequently, in 2005, the UN Committee for the Coordination of Statistical Activities promulgated an equivalent set of principles for international organisations compiling statistics - UN Principles Governing International Statistical Activities.

17. In December 1993 Eurostat announced its mission “to provide the European Union with a high quality statistical information service”. In 1995 it established a Working Group (WG) on Assessment of Quality in Statistics for business statistics with membership from European Member State NSOs. The EU Statistical Programme Committee (SPC) subsequently extended the WG role to cover all statistics, and more recently broadened it further by dropping “assessment of”. In 1999 the SPC established the Leadership Expert Group (LEG) on Quality chaired by Statistics Sweden. The LEG was enormously influential in defining and promoting quality awareness and initiatives within the European Statistical System (ESS) and outside. It presented 22 recommendations at the 2001 International Conference on Quality in Official Statistics in Stockholm and delivered a final report to the European Commission in 2002. The recommendations have served as the basis for many subsequent developments in Eurostat and EU Member States.

18. The LEG drafted the ESS Quality Declaration, which was adopted by the ESS Statistical Programme Committee in 2001 as a formal step towards total quality management in the ESS. The Quality Declaration comprises the ESS mission statement, the ESS vision statement and ten principles based on the UN Fundamental Principles but tailored to the ESS context. It served as the basis for subsequent formulation of the European Statistics Code of Practice (CoP) promulgated by the European Commission in 2005. The CoP commits Eurostat and the NSOs in the EU Member States to a common and comprehensive approach to production of high quality statistics. It builds upon the ESS definition of quality and comprises fifteen key principles, covering the institutional environment, statistical processes and outputs. For each principle, the CoP defines a set of indicators reflecting good practice and providing a basis for assessment. CoP compliance by Member State NSOs has since been measured both by self-assessment and by peer review.

19. The new Regulation on European statistics (Reg. (EC) No. 223/2009, commonly known as the statistical law) was adopted by the European Parliament and Council on 11 March 2009. It contains broad provisions relating to quality and ethics, thus providing a framework for ESS quality assurance and reporting far beyond the previous Council Reg. (EC) No. 322/97 on Community Statistics. The main changes comprise a reference to the CoP, a new Article (Article 12) defining eight quality criteria, dealing with the definition of quality targets and minimum standards, and emphasising ESS quality reporting.

C. Generic Quality Tools

20. Following the LEG recommendations, Eurostat and European NSOs developed a rich suite of generic quality tools. The ESS Standard for Quality Reports, which was promulgated in 2003 and updated in 2009, provides recommendations for the preparation of comprehensive quality reports by NSOs and Eurostat units. The ESS Handbook for Quality Reports, also updated in 2009, provides more details and examples. Both documents contain the most recent version of the ESS Standard Quality and Performance Indicators for use in summarizing the quality of statistical outputs.

21. The ESS Quality Glossary, first published in 2003, covers many technical terms in ESS quality documentation, providing a short definition of each term and indicating the source of the definition. A more comprehensive and up-to-date glossary is the Metadata Common Vocabulary, developed by a partnership of international organisations including Eurostat.

22. The European Self Assessment Checklist for Survey Managers (DESAP) enables the conduct of quick but systematic and comprehensive quality assessments of a survey and its outputs and identification of potential improvements. There is an Electronic Version of Self Assessment Checklist with an Electronic Version User Guide.

23. The ESS Handbook on improving quality by analysis of process variables describes a general approach and useful tools for identifying, measuring and analysing key process variables. The ESS Handbook on Data Quality - Assessment Methods and Tools details the full range of methods for assessing process and output quality and the tools that support them.

24. Over the past few years Eurostat has been developing a quality barometer, with the aim of summarising the performance of the ESS as a whole. More specifically, its objectives are:

- to measure the evolution of the data quality across domains and over time;

- to identify good practices and structural weaknesses;

- to provide better management information through a common monitoring framework.

25. In principle the quality barometer is constructed from the values of the standard set of quality and performance indicators for each statistical process (survey) for each country reporting to Eurostat. The problem is that these data are not available for all processes for all countries: quality reports do not exist for some statistical processes, quality reporting requirements have not been fully harmonised, and existing quality reporting information is not always updated on a regular basis. Thus, current work is focused on refining the new set of ESS quality and performance indicators and ensuring they are consistent with the emerging European metadata structure (ESMS). The general idea is that, once the ESMS has been implemented, it will provide the basis for obtaining the data needed for the quality barometer without adding to the reporting burden.
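The effect of incomplete reporting on such a summary can be illustrated with a small sketch. It is not Eurostat's method: the function name, the data layout, the country and survey labels and the indicator values are all hypothetical. The point is only that an aggregate computed from the available reports should be read alongside the share of country and process combinations that actually reported.

```python
from statistics import mean
from typing import Dict, Optional, Tuple

# Key: (country, statistical process); value: indicator value, or None if
# no quality report is available for that combination.
Reports = Dict[Tuple[str, str], Optional[float]]


def barometer(reports: Reports) -> Tuple[Optional[float], float]:
    """Return (mean of the available values, share of cells that reported)."""
    available = [v for v in reports.values() if v is not None]
    coverage = len(available) / len(reports) if reports else 0.0
    level = mean(available) if available else None
    return level, coverage


# Hypothetical values of one indicator, scaled to [0, 1].
reports = {
    ("Country A", "labour force survey"): 0.9,
    ("Country A", "consumer price index"): 0.7,
    ("Country B", "labour force survey"): None,  # no quality report
    ("Country B", "consumer price index"): 0.8,
}
level, coverage = barometer(reports)
```

Reporting the coverage figure next to the aggregate keeps a gap in quality reporting from being mistaken for a change in quality.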

26. Generic quality tools have also been developed by other international statistical organizations. Of these probably the best known is the IMF’s Data Quality Assessment Framework (DQAF). It was first developed in 2001 by the IMF Statistics Department. Its aim is to complement the quality dimension of the IMF’s Special Data Dissemination Standard (SDDS) and General Data Dissemination System (GDDS) and to underpin assessment of the quality of the data provided by countries as background for the IMF’s Reports on the Observance of Standards and Codes. It is designed for use by IMF staff and NSOs to assess the quality of specific types of national datasets (i.e., surveys, used in the broad sense of this document), presently covering the national accounts, consumer price index, producer price index, government financial statistics, monetary statistics, balance of payments, and external debt.

27. The DQAF is a process-oriented quality assessment tool. It provides a structure for comparing existing practices against best practices using five dimensions of data quality - integrity, methodological soundness, accuracy and reliability, serviceability, and accessibility - plus the so-called prerequisites for data quality. It identifies 3-5 elements of good practice for each dimension and several indicators for each element. Further, in the form of a multi-level framework, it enables datasets to be assessed concretely and in detail through focal issues and key points. The first three levels of the framework are generic, i.e., applicable to all datasets (DQAF July 2003); the lower levels are specific to each type of dataset.
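The three generic levels described above (dimension, element, indicator) form a simple nested structure. The sketch below shows one way such a hierarchy might be represented and summarised. The class names, the pass/fail flag and the element and indicator labels are placeholders invented for this illustration; they are not taken from the DQAF itself, and only the dimension name comes from the text.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Indicator:
    name: str
    meets_good_practice: bool  # simplified yes/no assessment (assumption)


@dataclass
class Element:
    name: str
    indicators: List[Indicator] = field(default_factory=list)


@dataclass
class Dimension:
    name: str
    elements: List[Element] = field(default_factory=list)

    def share_met(self) -> float:
        """Share of indicators under this dimension assessed as met."""
        flags = [i.meets_good_practice
                 for e in self.elements for i in e.indicators]
        return sum(flags) / len(flags) if flags else 0.0


# Placeholder elements and indicators under one of the five dimensions.
accessibility = Dimension("accessibility", [
    Element("element 1", [Indicator("indicator 1.1", True),
                          Indicator("indicator 1.2", False)]),
    Element("element 2", [Indicator("indicator 2.1", True)]),
])
share = accessibility.share_met()
```

The dataset-specific lower levels (focal issues and key points) could be attached beneath each indicator in the same way.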

D. Quality Assurance Frameworks for International Organisations

28. This section briefly introduces three publicly available documents describing QAFs for international organisations compiling statistics. The documents are geared to the specific characteristics of such organisations, namely that:

- statistics production is secondary to the primary aims of the organisation and belongs to a unit (department, division, etc.) within the organisation;

- the institutional structures of the organisations are quite different; and

- statistics are principally or entirely compiled using data supplied by NSOs, or other organisations, not data directly collected from businesses and households.

29. Nevertheless, except in the realm of direct data collection, the documents contain many useful ideas for the construction of a generic NQAF.

- The OECD Quality Framework and Guidelines were developed in 2002 and published in 2003. Output data quality is defined in terms of seven dimensions - relevance; accuracy; credibility; timeliness; accessibility; interpretability; and coherence. Credibility is an addition to the usual set of dimensions, reflecting the key role that user and stakeholder perceptions play in OECD context. Another factor specifically taken into account in the framework is cost-efficiency.

- Eurostat has developed its own QAF (latest version December 2008) in accordance with the draft generic Guidelines (previous sub-section). The Eurostat QAF views quality assurance as comprising five basic elements: documentation, standardisation of processes and statistical methods, quality measurement, strategic planning and control, and improvement actions. The Eurostat QAF defines four assessment types, which in increasing order of complexity are: self-assessment, supported self-assessment, peer review and rolling review.

- The European Central Bank Statistics Quality Framework (ECB SQF) was produced in 2008. It sets forth the main quality principles and elements guiding the production of ECB statistics. These principles refer to ‘institutional environment’, ‘statistical processes’ and ‘statistical outputs’. Specific Quality Assurance Procedures cover the following areas: ‘Programming activities and development of new statistics’, ‘Confidentiality protection’, ‘Data collection’, ‘Compilation and statistical analysis’, ‘Data accessibility and dissemination policy’, ‘Monitoring and reporting’, ‘Monitoring and reinforcing the satisfaction of key stakeholders’.

- Since 2005 the United Nations Committee for the Coordination of Statistical Activities (CCSA) has supported a project on the use and convergence of QAFs for international organisations with the aim of ensuring that their current and future quality activities are well integrated.

E. NSO Quality Tools

30. Individual NSOs have also developed numerous quality policies, standards and tools for their own purposes. At Statistics Canada, for example, the Compendium of Methods of Error Evaluation in Surveys was produced in 1978, followed by the Quality Guidelines (1985), expansion of the Policy on Informing Users of Data Quality and Methodology (1986), and the first version of a formal Quality Assurance Framework (1997). The Quality Guidelines were subsequently revised in 1987, 1998 and 2003, and the fifth revision is scheduled for 2009. The Quality Assurance Framework was revised in 2002.

31. Over the same period many other NSOs were involved in similar quality initiatives, especially the production of quality guidelines. Examples are the Office for National Statistics’ Guidelines for Measuring Statistical Quality, Statistics Finland’s Quality Guidelines for Official Statistics, and the Australian Bureau of Statistics’ National Statistical Service Handbook. In the U.S., statistical agencies have been obliged to comply with the Office of Management and Budget’s 2001 Quality Guidelines and with its 2006 Standards and Guidelines for Statistical Surveys. The resulting quality documentation includes:

- the Bureau of Labor Statistics’ Guidelines for Informing Users of Information Quality and Methodology;

- the Census Bureau’s Quality Standards and Quality Performance Principles; and

- the National Center for Health Statistics’ Guidelines for Ensuring the Quality of Information Disseminated to the Public.

32. Statistics Canada’s Quality Assurance Framework is descriptive in the sense that it situates existing quality related policies and practices within a common framework rather than imposing new policies or practices. It notes that the various measures it describes do not necessarily apply uniformly to every survey and that it is the responsibility of managers to determine which measures are appropriate. It is intended for reference and training purposes. In summary, the document provides valuable insights into what could be included in a generic NQAF. More details are provided in Annex 1.

III. Benefits, Content and Structure of a Generic NQAF

A. Benefits of a NQAF

33. Many NSOs are involved in a comprehensive range of quality initiatives and activities but without an over-arching framework to give them context and to explain relationships between them and the various quality tools. For this reason some NSOs have adopted the ISO 9000 standard on Quality Management Systems as the umbrella for their quality work. As demonstrated by Statistics Canada, a NQAF is such an umbrella, providing a single place to record or reference the full range of quality concepts, policies and practices.

- It provides a systematic mechanism for ongoing identification and resolution of quality problems, maximising the interaction between staff across the NSO.

- It gives greater transparency to the processes by which quality is assured and reinforces the image of the NSO as a credible provider of good quality statistics.

- It provides a basis for creating and maintaining a quality culture. It is a valuable source of reference material for training.

34. The process of developing an NQAF is typically best carried out by an NSO task force with experienced staff drawn from a range of areas – program planning, survey design, survey operations, dissemination, infrastructure development and support. Thus, the development process has intrinsic benefits in its own right as it obliges staff to come together from their various disciplines in confronting quality issues and thinking through requirements.

35. Establishing a common template for the various national approaches would facilitate communication about quality assurance and lead to a consensus about what should be included in a National Quality Assurance Framework.

B. Scope of a National Quality Assurance Framework

36. There is a good deal of overlap, even confusion, between the various terms such as quality management, total quality management, quality management system, quality assurance framework, quality assurance, quality guidelines, quality evaluation, quality measurement, quality assessment, quality reporting, etc. This paper takes the view that a national quality assurance framework (NQAF) is:

- narrower than total quality management in that it puts more focus on management of core statistical functions rather than on management of human resources and communications;

- less detailed than quality guidelines in that it summarises good practices, rather than describing them;

- broader than quality guidelines in that it refers to the mechanisms by which good practices can be implemented and to the institutional environment; and

- targeted at roughly the same organisational level as the quality management system described in the ISO 9000 series, but tailored to the specific context of a national statistical office (NSO) whose role is to collect data and produce statistical products.

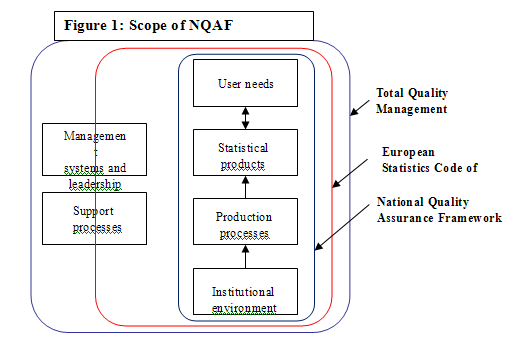

37. A NQAF should address not only individual surveys but also the complete statistical program, and the infrastructure supporting it. Its scope is illustrated in Figure 1 with reference to the elements covered by total quality management and the European Statistics Code of Practice.

38. The review of quality policies, models, procedures and guidelines summarised in Section II suggests that a NQAF should contain:

- Context – the situation in which the document has been developed, its purpose, and its relationship to other policies, frameworks and procedures, sometimes referencing or incorporated in the NSO mission, vision and values;

- Quality policy – a short statement by senior management indicating the nature and extent of its commitment to quality management;

- Quality model – a definition of what is meant by quality, elaborated in terms of output quality and process dimensions/components;

- Quality objectives, standards and guidelines – target quality objectives together with international or local standards and guidelines adopted by the organisation;

- Quality assurance procedures - part of, or embedded in, the production processes to the extent possible;

- Quality measurement procedures - specifically including a set of quality and performance indicators, with procedures for collecting the corresponding data values being embedded in the production processes to the extent possible;

- Quality assessment procedures – sometimes incorporated in the quality assurance procedures, more frequently conducted on a periodic basis, for example based on a self-assessment checklist such as the European DESAP checklist;

- Quality improvement procedures – continual improvement and re-engineering initiatives specific to the NSO.

C. Development of a Generic NQAF

39. The arguments in favour of developing and promoting a standard (generic) NQAF are that it would:

- provide a stimulus to NSOs that do not have an over-arching quality framework to introduce one;

- provide a basis for NSOs that already have such a framework to consider ways in which it could be enhanced;

- be a means of sharing good practices; and

- reduce NSOs’ costs in NQAF creation and implementation.

How can such a generic NQAF best be developed?

40. The European Standard for Quality Reports provides an example of a fairly detailed standard that can be applied by NSOs. It is relatively easy to imagine a generic set of quality guidelines being constructed along the same lines. However, whereas a quality reporting standard and quality guidelines deal largely with statistical techniques, a NQAF has more focus on the organisation of an NSO and the environment within which the techniques are applied. Organisational set-up and environment are likely to differ far more from one NSO to another than a set of statistical techniques. Thus, as noted in Eurostat’s Guidelines for Implementation of QAFs for International Organisations Compiling Statistics, it is quite difficult to imagine a generic one-size-fits-all NQAF.

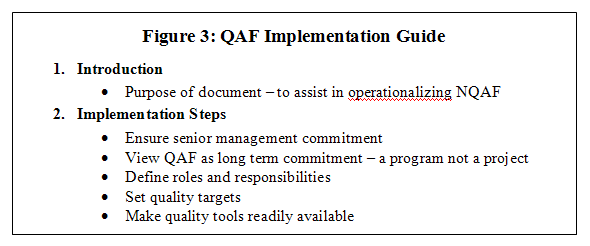

41. A less ambitious and more realistic target is the notion of an NQAF template that provides the general structure within which individual NQAFs can be developed. The template can be more or less detailed depending upon how much material can be reasonably assumed common across NSOs. To be useful without placing an additional burden on NSOs, a generic template should allow NSOs to easily map their quality approaches along the various headings. A first draft proposal for such a generic template is presented in Figure 2, accompanied by an implementation template in Figure 3, which is predominantly output oriented, mainly following the output quality dimensions.

IV. Points for discussion

42. The Statistical Commission is invited to discuss:

(ii) the proposed quality template presented in this document;

(iii) the proposed adoption of the NQAF in 2011.

Figure 2: Generic National Quality Assurance Framework (NQAF)

1. Introduction

- Current circumstances and key issues driving need for quality management.

- Benefits of QAF.

- Relationship to other statistical office policies, strategies and frameworks.

- Content of QAF (that is, of rest of document).

- Existing quality policies, models, objectives and procedures.

- Role of QAF - where the QAF fits in the quality toolkit.

- Managing user and stakeholder relationships – user satisfaction surveys, feedback mechanisms, councils.

- Managing relevance – program review, planning process, data analysis.

- Managing accuracy – design, accuracy assessment, quality control, revision policy.

- Managing timeliness and punctuality – advanced release dates, preliminary/final releases.

- Managing accessibility – product definition, dissemination practices, search facilities.

- Managing interpretability/clarity – concepts, sources, methods, informing users of quality.

- Managing coherence and comparability – standards, harmonized concepts and methods.

- Managing output quality tradeoffs – especially relevance, accuracy and timeliness.

- Managing provider relationships – response burden measurement and reduction, response rate maintenance.

- Managing statistical infrastructure – standards, registers, policies including confidentiality, security, transparency.

- Managing metadata – relating to quality.

- Quality indicators – defining, collecting, analysing, synthesizing – composite indicators, quality barometer/dashboard.

- Quality targets – setting and monitoring.

- Quality assessment program – self-assessment, peer review, labeling/certification.

- Performance management – planning, cost and efficiency, sharing good practices.

- Continuous improvement program – quality culture, ongoing enhancements within operating budgets.

- Governance structure – for quality and performance trade-offs and reengineering initiatives, based on results of quality assessments.

- Summary of benefits.

- Reference to Implementation Guide.

Annex 1: Quality Assurance Framework at Statistics Canada

Annex in pdf

References

Australian Bureau of Statistics NSS Handbook, Australian Bureau of Statistics, http://www.nss.gov.au/nss/home.nsf

Bureau of Labor Statistics (2002) BLS Guidelines for Informing Users of Information Quality and Methodology, Bureau of Labor Statistics, http://www.bls.gov/bls/quality.htm

Eurostat (2001) Quality Declaration of the European Statistical System, http://epp.eurostat.ec.europa.eu/pls/portal/docs/PAGE/PGP_DS_QUALITY/TAB47141301/DECLARATIONS.PDF

Eurostat (2002) Quality in the European Statistical System – The Way Forward, European Commission and Eurostat, Luxembourg.

Eurostat (2003) DESAP - The European Self Assessment Checklist for Survey Managers, European Commission and Eurostat, http://epp.eurostat.ec.europa.eu/pls/portal/docs/PAGE/PGP_DS_QUALITY/TAB47143233/G0-LEG-20031010-EN.PDF

Eurostat (2005) European Statistics Code of Practice, European Commission and Eurostat, http://epp.eurostat.ec.europa.eu/pls/portal/docs/PAGE/PGP_DS_QUALITY/TAB47141301/VERSIONE_INGLESE_WEB.PDF

Eurostat (2007a) Handbook on Data Quality Assessment Methods and Tools, European Commission and Eurostat, Wiesbaden.

Eurostat (2007b), European Statistical System - Code of Practice Peer Reviews: The National Statistical Institute's guide, European Commission and Eurostat, Luxembourg.

Eurostat (2009), ESS Standard for Quality Reports (2009 edition), Methodologies and Working Papers, Eurostat, Luxembourg.

International Monetary Fund (2003) Data Quality Assessment Framework and Data Quality Program, International Monetary Fund, http://www.imf.org/external/np/sta/dsbb/2003/eng/dqaf.htm

International Monetary Fund (2000) General Data Dissemination System, International Monetary Fund, http://dsbb.imf.org/Applications/web/getpage/?pagename=gddswhatgdds

National Center for Health Statistics (2007) NCHS Guidelines for Ensuring the Quality of Information Disseminated to the Public, National Center for Health Statistics, http://www.cdc.gov/nchs/about/quality.htm

Organisation for Economic Co-operation and Development (2003) Quality Framework and Guidelines for OECD Statistical Activities, Paris, OECD Document STD/QFS(2003)1.

Office of Management and Budget (2001) Guidelines for Ensuring and Maximizing the Quality, Objectivity, Utility, and Integrity of Information Disseminated by Federal Agencies, Office of Management and Budget, http://www.whitehouse.gov/omb/assets/omb/fedreg/reproducible2.pdf

Office of Management and Budget (2006) Standards and Guidelines for Statistical Surveys, Office of Management and Budget, http://www.whitehouse.gov/omb/inforeg/statpolicy/standards_stat_surveys.pdf

Office for National Statistics (2006) Guidelines for Measuring Statistical Quality, Office for National Statistics, London, 2006, http://www.statistics.gov.uk/downloads/theme_other/Guidelines_Subject.pdf

Sheikh, M. (2009) “A Long-Term Vision for Statistics Canada”, Statistics Canada internal document, March 2009.

Statistics Canada (1978) “Policy on Informing Users of Data Quality and Methodology”, Statistics Canada Policy Manual, March 12 1978.

Statistics Canada (1994). Draft Policy on Data Quality Criteria in the Dissemination of Statistical Information, September 1994. Internal document.

Statistics Canada (2000). Guidelines for Seasonal Adjustment and Trend-Cycle Estimation, March 2000. Internal document.

Statistics Canada (2002a) Statistics Canada’s Quality Assurance Framework, Publication 12-586-XIE.

Statistics Canada (2002b). Standards and Guidelines on the Presentation of Data in Tables of Statistical Publications, November 1990. Internal document.

Statistics Canada (2003) Quality Guidelines (Fourth Edition), Publication 12-539-XIE.

Statistics Canada (2007) Quality Assurance Review: Summary Report, Publication 12-594-XWE, June 2007.

Statistics Finland (2007), Quality Guidelines for Official Statistics, 2nd Revised Edition, ISBN 978–952–467–743–1

UNECE Secretariat (2008) “Generic Statistical Business Process Model: Version 3.1 – December 2008”, Joint UNECE/Eurostat/OECD Work Session on Statistical Metadata (METIS).

United Nations (2004) Fundamental Principles of Official Statistics, United Nations, http://unstats.un.org/unsd/statcom/doc04/2004-21e.pdf

U.S. Bureau of the Census-a, U.S. Census Bureau Quality Standards, United States Bureau of the Census, http://www.census.gov/quality/quality_standards.htm

U.S. Bureau of the Census-b, U.S. Census Bureau Quality Performance Principles, United States Bureau of the Census, http://www.census.gov/quality/performance_principles.htm
