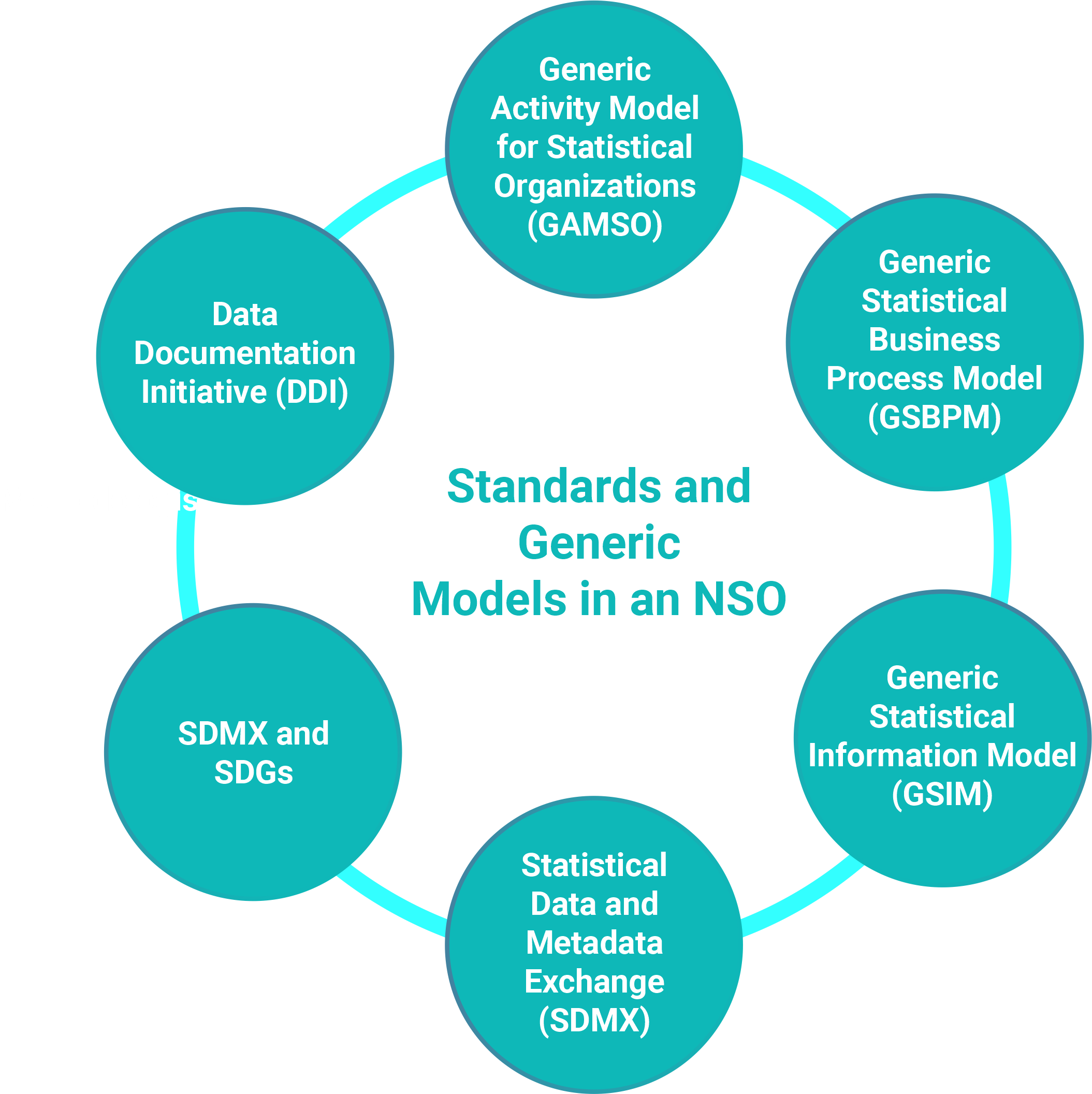

14.4 Use of standards and generic models in an NSO

14.4.1 The importance of standards

Standards are enablers of modernisation: by using common standards, statistical systems can be modernised and “industrialised”, allowing internationally comparable statistics to be produced more efficiently. Standards also facilitate the sharing of data and technology in the development of internationally shared solutions, which generates economies of scale.

Standards are expected to provide benefits in the following areas:

■ Efficiency: Standards offer, by definition, reusable solution patterns. They capture knowledge and promote best practices, allowing interested parties to reuse solutions without bearing the full cost of developing them.

■ Quality and trust: Standards help to build trust by providing widely validated and accepted solutions to common problems.

■ Collaboration and competition: Standards often provide a common language which can support collaboration among organizations and at the same time stimulate competition among service providers by reducing dependency and vendor lock-in that may be associated with particular solutions.

■ Innovation: Standards foster innovation by formalising knowledge and best practices, which offers a solid basis for developments of all kinds.

A number of major statistical standards are in use today, while others are emerging and maturing.

14.4.2 Generic Activity Model for Statistical Organizations (GAMSO)

The Generic Activity Model for Statistical Organizations (GAMSO) covers activities at the highest level of a statistical organization. It describes and defines the activities that take place within a typical organization that produces official statistics. Launched in 2015, GAMSO extends and complements the Generic Statistical Business Process Model (GSBPM) by adding the activities beyond business processes that are needed to support statistical production. GAMSO is described in more detail in Chapter 5.5.4 – The Generic Activity Model for Statistical Organizations.

The GAMSO activities specifically concerned with IT management cover coordination and management of information and technology resources and solutions. They include the management of the physical security of data and shared infrastructures:

■ Manage IT assets and services;

■ Manage IT security;

■ Manage technological change.

■ Modernstats – the Generic Activity Model for Statistical Organizations.

14.4.3 Generic Statistical Business Process Model (GSBPM)

The Generic Statistical Business Process Model (GSBPM) is a statistical model that provides a standard terminology for describing the different steps involved in the production of official statistics. GSBPM can be considered the "Production" part of GAMSO. Since its launch in 2009, it has become widely adopted in NSOs and other statistical organizations. GSBPM allows an NSO to define, describe and map statistical processes in a coherent way, thereby making it easier to share expertise. GSBPM is part of a wider trend towards a process-oriented approach rather than one focused on a particular subject-matter topic. GSBPM is applicable to all activities undertaken by statistical organizations which lead to statistical output. It accommodates data sources such as administrative data, register-based statistics, and also Census and mixed sources.

GSBPM covers eight processes: specifying needs, survey design, building products, data collection, data processing, analysis, dissemination and evaluation. Each process comprises a number of sub-processes. These are described in detail in Chapter 5.5.5 – Definition of an integrated production system and in Chapter 14.4.3 – Generic Statistical Business Process Model (GSBPM).
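As an illustration of mapping statistical processes in a coherent way, the following minimal Python sketch tags the activities of one survey with the processes listed above (GSBPM itself calls them phases) and reports which ones have no activity mapped to them. The activity names and the helper functions are hypothetical and are not part of GSBPM.

```python
from enum import Enum


class Phase(Enum):
    """The eight top-level GSBPM processes, in the order listed in the text."""
    SPECIFY_NEEDS = 1
    DESIGN = 2
    BUILD = 3
    COLLECT = 4
    PROCESS = 5
    ANALYSE = 6
    DISSEMINATE = 7
    EVALUATE = 8


# Hypothetical activities of one survey, each tagged with a phase.
SURVEY_ACTIVITIES = [
    ("Run field interviews", Phase.COLLECT),
    ("Consult users on indicator needs", Phase.SPECIFY_NEEDS),
    ("Edit and impute responses", Phase.PROCESS),
    ("Publish tables on the website", Phase.DISSEMINATE),
    ("Draft the questionnaire", Phase.DESIGN),
]


def map_to_phases(activities):
    """Group activity names by phase, in phase order; unused phases keep an empty list."""
    mapping = {phase: [] for phase in Phase}
    for name, phase in activities:
        mapping[phase].append(name)
    return mapping


def uncovered_phases(activities):
    """Return the phases that have no activity mapped to them."""
    return [phase for phase, names in map_to_phases(activities).items() if not names]
```

Calling `uncovered_phases(SURVEY_ACTIVITIES)` lists the processes that the hypothetical survey has not yet described, which is one simple way a shared terminology makes gaps visible across teams.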

GSBPM can play an important role in the modernisation of the statistical system, especially concerning the statistical project cycle, and can accommodate emerging issues in data collection such as the introduction of mobile data collection and Big Data.

■ Modernstats – the Generic Statistical Business Process Model.

■ Modernstats – the GSBPM resources repository.

14.4.4 Generic Statistical Information Model (GSIM)

The Generic Statistical Information Model (GSIM) standard was launched in 2012 and describes the information objects and flows within a statistical business process. GSIM is complementary to GSBPM, and the framework enables descriptions of the definition, management and use of data and metadata throughout the statistical information process.

GSIM is a conceptual model whose information objects are grouped into four broad categories: Business, Production, Structures and Concepts. It provides a set of standardized information objects that serve as inputs and outputs in the design and production of statistics, regardless of the subject matter. By using GSIM, an NSO can analyse how its business could be organized more efficiently.

As with the other standards, GSIM helps improve communication by providing a common vocabulary for conversations between different business and IT roles, between different subject matter domains and between NSOs at national and international levels. This common vocabulary contributes towards the creation of an environment for reuse and sharing of methods, components and processes and the development of common tools. GSIM also allows an NSO to understand and map common statistical information and processes and the roles and relationships between other standards such as SDMX and DDI.

■ Modernstats – the Generic Statistical Information Model.

■ Statistics Finland project to adopt the GSIM Classification model.

■ Statistics Sweden project to incorporate GSIM in the information architecture and the information models.

14.4.5 Statistical Data and Metadata Exchange (SDMX)

The Statistical Data and Metadata Exchange (SDMX) standard for statistical data and metadata access and exchange was established in 2000 under the sponsorship of seven international organizations (IMF, World Bank, UNSD, Eurostat, BIS, ECB and OECD).

The importance of a standard for statistical data exchange is well known and should not be underestimated. Mapping data to different formats for collection and dissemination is labour-intensive, a problem familiar to any NSO, and the need for timely transmission of SDG indicators has made a common standard even more vital.

SDMX is a standard for both content and technology that standardises statistical data and metadata content and structure. SDMX facilitates data and metadata exchange between an NSO and international organizations, and also within a national statistical system. SDMX aims to reduce the reporting burden for data providers and to provide faster and more reliable data and metadata sharing. Using SDMX facilitates the standardisation of IT applications and infrastructure and can improve the harmonisation of statistical business processes. A good deal of reusable software is available for implementing SDMX in an NSO, which can reduce development and maintenance costs through shared technology and know-how.

SDMX supports data quality by building validation into its data structures and validation rules, and through the many tools made freely available with the standard as part of its open-source approach. SDMX is an ISO standard (ISO 17369) and has been adopted by the UNSC as the preferred standard for data exchange.
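The idea of validation built into data structures can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the dictionary below is a hypothetical, much-simplified stand-in for a data structure definition with its code lists, and `validate_observation` is not a function of any SDMX tool.

```python
# Hypothetical, simplified stand-in for a data structure definition:
# each dimension name maps to the set of codes allowed by its code list.
DSD = {
    "FREQ": {"A", "Q", "M"},
    "REF_AREA": {"KE", "GH", "FI"},
    "INDICATOR": {"POP_TOTAL", "GDP_PC"},
}


def validate_observation(obs, dsd=DSD):
    """Return a list of problems found in one observation; an empty list means it passes."""
    problems = []
    # Every dimension must be present and carry a code from its code list.
    for dimension, allowed in dsd.items():
        if dimension not in obs:
            problems.append(f"missing dimension {dimension}")
        elif obs[dimension] not in allowed:
            problems.append(f"{dimension}={obs[dimension]!r} not in code list")
    # The observation value must be convertible to a number.
    try:
        float(obs.get("OBS_VALUE", ""))
    except (TypeError, ValueError):
        problems.append("OBS_VALUE is not numeric")
    return problems
```

Because the checks are derived from the structure itself rather than written separately for each dataset, the same routine can be reused for any dataset described in the same way.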

Thanks to its common Information Model, SDMX greatly facilitates the reuse of software products and components. Software products such as the SDMX Reference Infrastructure have made it possible to establish SDMX APIs by connecting to dissemination databases and mapping their structures to Data Structure Definitions (DSDs). This enables an NSO and international agencies to establish standards-based APIs without any software development, thus greatly lowering the barrier to entry. Furthermore, widely used tools make it possible to implement SDMX for data sharing without any dissemination infrastructure in place, for example by using Microsoft Excel or CSV files. This has greatly sped up the adoption of standards-based data sharing by statistical offices.
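To show what a standards-based API offers to a data user, the sketch below assembles a data query URL. It assumes the service follows the data-query path pattern of the SDMX RESTful API, in which the key lists one slot per dimension separated by dots, alternatives are joined with "+", and an empty slot matches any value. The entry point, the dataflow name `DF_SDG` and the dimension order are hypothetical; a real service publishes its own.

```python
from urllib.parse import urlencode

# Hypothetical entry point and dimension order, for illustration only.
BASE_URL = "https://example.org/sdmx/rest"
DIMENSION_ORDER = ["FREQ", "REF_AREA", "INDICATOR"]


def build_key(selection, dimension_order=DIMENSION_ORDER):
    """Build the dot-separated key: '+' joins alternatives, an empty slot is a wildcard."""
    unknown = set(selection) - set(dimension_order)
    if unknown:
        raise ValueError(f"unknown dimensions: {sorted(unknown)}")
    return ".".join("+".join(selection.get(dim, [])) for dim in dimension_order)


def build_data_url(flow_ref, selection, start_period=None, end_period=None):
    """Assemble a data query URL of the form {base}/data/{flow}/{key}?startPeriod=...&endPeriod=..."""
    url = f"{BASE_URL}/data/{flow_ref}/{build_key(selection)}"
    params = {}
    if start_period:
        params["startPeriod"] = start_period
    if end_period:
        params["endPeriod"] = end_period
    return f"{url}?{urlencode(params)}" if params else url
```

For example, `build_data_url("DF_SDG", {"FREQ": ["A"], "REF_AREA": ["KE", "GH"]}, "2015", "2020")` requests annual data for two reference areas and all indicators. The same client code works against any service that exposes the same pattern, which is the practical benefit of a common information model.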

SDMX tools are not limited to data exchange or dissemination. As they mature, they are increasingly used to automate various business processes along the statistical production chain, in conjunction with other standards such as CSPA and DDI.

14.4.6 SDMX and SDGs

A specific SDG indicator data structure (Data Structure Definition) will be used to report and disseminate the indicators at national and international levels. SDMX compliance has been built into a number of internationally used dissemination platforms such as the African Information Highway, the IMF web service and the OECD.Stat platform to ensure efficient transmission of SDG Indicator data and metadata.

■ SDMX official website.

■ The SDMX Content-Oriented Guidelines (COG) recommend practices for creating interoperable data and metadata sets using the SDMX technical standards. The guidelines are applicable to all statistical domains and focus on harmonising concepts and terminology that are common to a large number of statistical domains.

■ Inventory of software tools for SDMX implementers and developers, developed by organizations involved in the SDMX initiative and by external actors.

■ Eurostat main SDMX page.

■ European Statistical System’s SDMX standards for metadata reporting.

■ Guidelines for managing an SDMX design project.

■ The SDMX Global Registry is the technical infrastructure containing publicly available metadata material, data structure definitions (DSDs), and related artefacts (concept schemes, metadata structure definitions, code lists, etc.).

■ The SDMX Starter Kit: a resource for an NSO wishing to implement the SDMX technical standards and content-oriented guidelines for the exchange and dissemination of aggregate data and metadata.

■ The African Development Bank Open Data for Africa project.

■ The IMF SDMX Central project allows users to validate, convert, tabulate and publish data to the IMF SDMX Central.

14.4.7 Data Documentation Initiative (DDI)

The Data Documentation Initiative (DDI) is an international standard for describing metadata from surveys, questionnaires, statistical data files, and social sciences study-level information. DDI focuses on microdata and tabulation of aggregates/indicators.

DDI is a membership-based alliance of NSOs, international organizations, academia and research bodies. The DDI specification provides a format for content, exchange, and preservation of questionnaire and data file information. It fills a need related to the challenge of storing and distributing social science metadata, creating an international standard for the design of metadata about a dataset.

In many NSOs, the exact processing applied in the production of aggregate data products is not well documented. DDI can be used to describe data processing in detail, documenting each step of a process. Used this way, DDI is more than documentation: its metadata can drive automation throughout the entire process, creating “metadata-driven” systems. DDI can thereby also act as the institutional memory of an NSO.
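A “metadata-driven” system can be sketched as follows. The step descriptions are plain Python dictionaries standing in for processing metadata that, in practice, would be held in DDI. The operation names, field names and functions are hypothetical and do not reflect the DDI schema.

```python
# Hypothetical processing metadata: each entry describes one step.
# Plain dictionaries are used only as a stand-in for a DDI description.
STEPS = [
    {"id": "s1", "operation": "drop_missing", "variable": "income"},
    {"id": "s2", "operation": "divide", "variable": "income", "divisor": 1000},
]


def drop_missing(rows, step):
    """Remove rows where the variable is absent or None."""
    return [r for r in rows if r.get(step["variable"]) is not None]


def divide(rows, step):
    """Divide the variable by the divisor given in the step description."""
    var = step["variable"]
    return [{**r, var: r[var] / step["divisor"]} for r in rows]


# Registry linking operation names in the metadata to executable code.
OPERATIONS = {"drop_missing": drop_missing, "divide": divide}


def run(rows, steps=STEPS):
    """Apply each described step in order and record what was done."""
    log = []
    for step in steps:
        before = len(rows)
        rows = OPERATIONS[step["operation"]](rows, step)
        log.append(
            f"{step['id']}: {step['operation']} on {step['variable']} "
            f"({before} -> {len(rows)} rows)"
        )
    return rows, log
```

The same description both drives the processing and produces the record of what was done, so the documentation cannot drift away from the actual process.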

DDI is a standard that promotes greater process efficiency in the “industrialised” production of statistics. DDI can also be used to facilitate microdata access, as well as for register data.

■ DDI Specifications.

■ Paper: DDI and SDMX are complementary, not competing, standards.

■ Guidelines on Mapping Metadata between SDMX and DDI.